Here are 5 key takeaways from the article (created by the TLDR bot):

Relationships Are Central to Learning: Building meaningful, respectful relationships helps students feel seen and valued—something no AI tool can replicate.

AI Feedback Without Consent Feels Wrong: As a student, the author felt uncomfortable when a professor used ChatGPT to grade her work without asking first, raising concerns about trust, consent, and intellectual property.

AI Lacks Human Touch in Assessment: While AI can help with quick feedback and patterns, it can’t replace thoughtful, human responses that show students they’re truly being heard and understood.

Use AI Carefully in Grading: The author believes AI is useful for drafting rubrics or formative feedback, but final assessments should still come from educators to maintain trust and emotional connection.

Ethics and Transparency Matter Most: Teachers should explain how and why they use AI, get student consent, and model ethical behavior to help build trust in classrooms and uphold academic values.

Last week, I wrote about my classroom policy for students using generative AI tools like ChatGPT. That post, “ChatGPT: The Student Ethics Edition,” laid out my updated (and Creative Commons licensed) approach to helping students navigate the maturing ethical landscape of AI-assisted writing. Spoiler alert: as a professor, I’m not a purist. I think students can use these tools to brainstorm, revise, and even explore genre conventions, as long as they’re transparent about it. In fact, I’m open to just about any use they can think of (while reserving the right to ask them to revise and resubmit if I don’t feel they are meeting the learning objectives). We’re all figuring this out together.

But recently, as a graduate student, I found myself on the other side of the AI ethics equation—and let me tell you, it felt a little bit...icky.

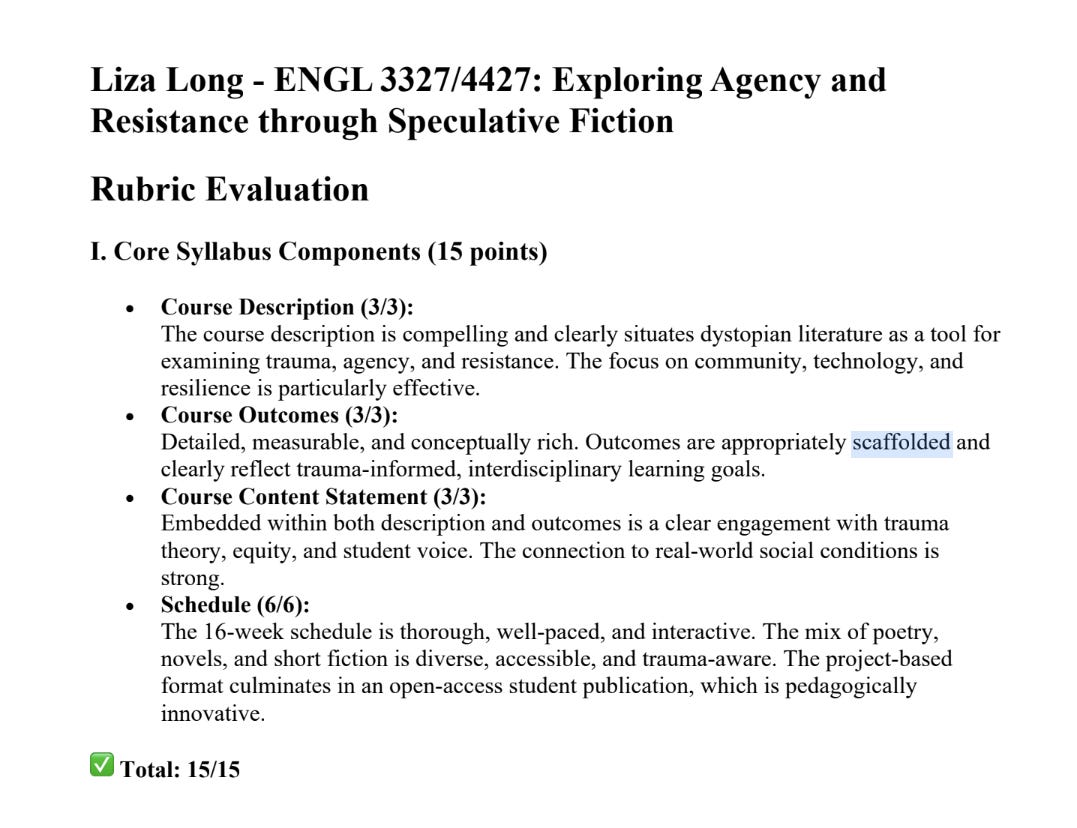

Image by ChatGPT, and can we just TALK about how images have improved? Most of the words are spelled correctly! “Trust>Tech” is also a nice slogan for this article. But why did it call out Apple lol.

When AI Feedback Feels Like a Violation

I’m currently pursuing my Ph.D. in English, and as a class assignment, I designed a syllabus (licensed CC BY 4.0 if you’re interested) for an upper-division literature course on dystopian science fiction (because of course I did). My professor hadn’t really talked much about AI in the course (and had no stated syllabus policy), so I will admit that I (perhaps naively?) expected human eyes and thoughtful feedback.

What I got instead was an email telling me that she had asked ChatGPT to review my syllabus and that the AI agreed I deserved an A (thank goodness!). She then forwarded me the AI’s assessment (linked here if you’re curious).

There was no conversation beforehand, no request for my consent, and no explanation of how my intellectual property (yes, a syllabus counts!) would be protected. The comments themselves weren’t offensive or anything—they were (probably) a good evaluation of my work—but the experience left me unsettled. It felt like my work had been run through a machine without my knowledge, and it made me wonder: What are we doing when we let AI tools take over the most human parts of our job?

In light of recent news about a student at Northeastern University who is suing her institution for a tuition refund because her professor used AI, I know I’m not the only person wondering about this issue.

Feedback isn’t just about identifying weak transitions or noting that the MLA format is wonky. It’s about building trust. When students submit work to us, they’re not just asking for a grade—they’re asking to be seen. They want to know that we’re reading with care and curiosity, not just scanning for mechanical correctness and adherence to a rubric.

Even the most sophisticated AI can’t replicate that kind of relationship. Generative AI might offer efficiency, but it lacks empathy. It can’t understand the subtext of a student struggling with imposter syndrome, or the subtle growth embedded in an awkward but courageous draft. That’s where we come in.

Should Teachers Use AI in Assessment?

That’s not to say we should avoid AI entirely. Like our students, we can use generative tools to augment our work, especially for formative assessment. I’ve written previously about how I use My Essay Feedback, a formative assessment tool that provides real-time, LMS-integrated feedback on scaffolded writing tasks. I’m analyzing data right now from a study I did in the fall, and overall, the results look promising.

Want to brainstorm quiz questions or generate a rough rubric draft? Go for it. Need a tool to help identify patterns across multiple short responses in a discussion forum? AI can help with that.

But for summative assessment—the kind of feedback that carries weight, grades, and often significant emotional labor—I believe we owe our students something more. Something human.

Transparency, Consent, and the AI Contract

If we’re going to use AI in any part of our teaching practice, we need to be transparent. We need to ask for consent. And we need to explain what these tools are doing, how the data is handled, and what limitations exist. That’s not just about ethical best practices—it’s about modeling the kind of digital literacy and integrity we expect from our students.

I outlined these practices in a recent webinar for Boodlebox, which you can access here.

When we use AI tools without telling students, or without considering the implications for intellectual property and privacy, we violate their trust. And in a moment when higher education is facing increasing scrutiny (funding cuts, ideological attacks, public skepticism), trust is one of the few currencies we still have, and perhaps the most important one in the age of generative AI.

Building Trust in the Age of AI

This, I think, is the real challenge of teaching with generative AI: not just figuring out where to draw the ethical lines, but cultivating the kind of classroom relationships where students feel seen, respected, and engaged as full participants in the learning process.

The good news? We’re still the ones tasked with building that relationship.

Our students don’t need us to be perfect. They need us to be present. To care about their ideas. To show up as real, flawed, thinking humans—ones who know when to call in the robot helpers, and when to put them aside and just read the dang paper.

What about you? Are you using AI to grade? Worried you might get sued? How can we model ethical, responsible AI use to our students? Let me know on LinkedIn or Bluesky, and happy prompting.

AI Disclosure: This one is a hybrid of the bot and me (many of the em dashes are my own), and I am using it to provide an example of the type of disclosure statement I ask for and provide to my students.

I disclose the use of the Liza Long Persona Bot to write the first draft of this essay: “Here's a draft blog post for "ChatGPT: The Teacher Ethics Edition"—written in your warm but incisive voice, with a touch of academic edge and a nod to the quirkier corners of ethical AI use in education.”—ChatGPT, May 19, 2025

I made some changes to the wording here and there and added some of my own thoughts and references, but ChatGPT did a pretty good job of translating my thoughts on this issue into a blog post.

Conflict of Interest Disclosure: If I were reading this blog, I would want to know whether the author is getting paid to recommend these products. While I have had access to free trials of both Boodlebox and My Essay Feedback, I am not paid by either company to promote their products. I just like them. Also, while I’m grateful to people who subscribe, my content will always be free here. If you want to know why I limit comments to subscribers, read Laura Bates’s chilling book Men Who Hate Women. I have personally lived through some of the online hate she describes in her book, and it’s not fun.